1. Thin Lens

"Focus is the art of choosing what to see and what to let blur into oblivion."

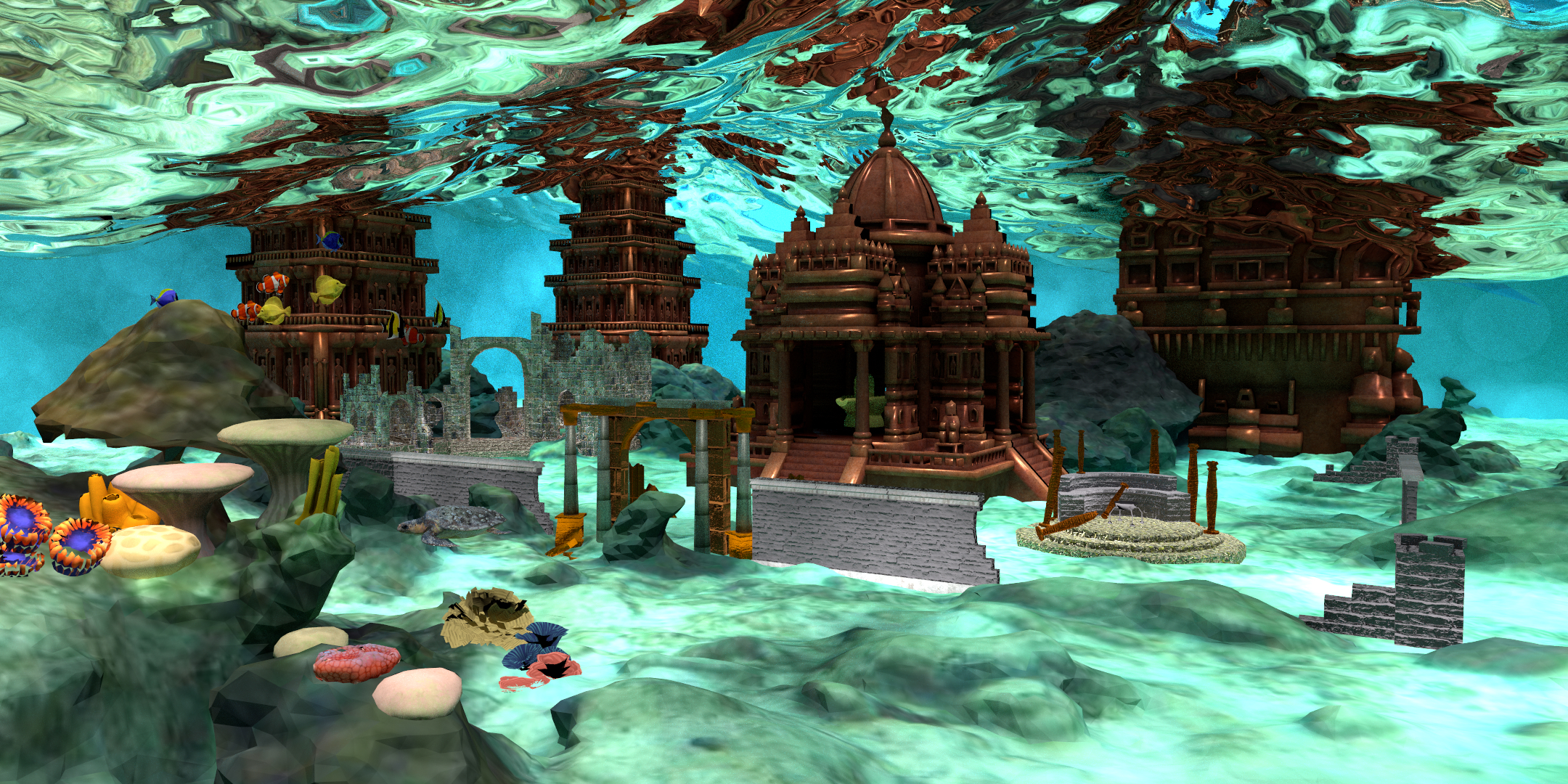

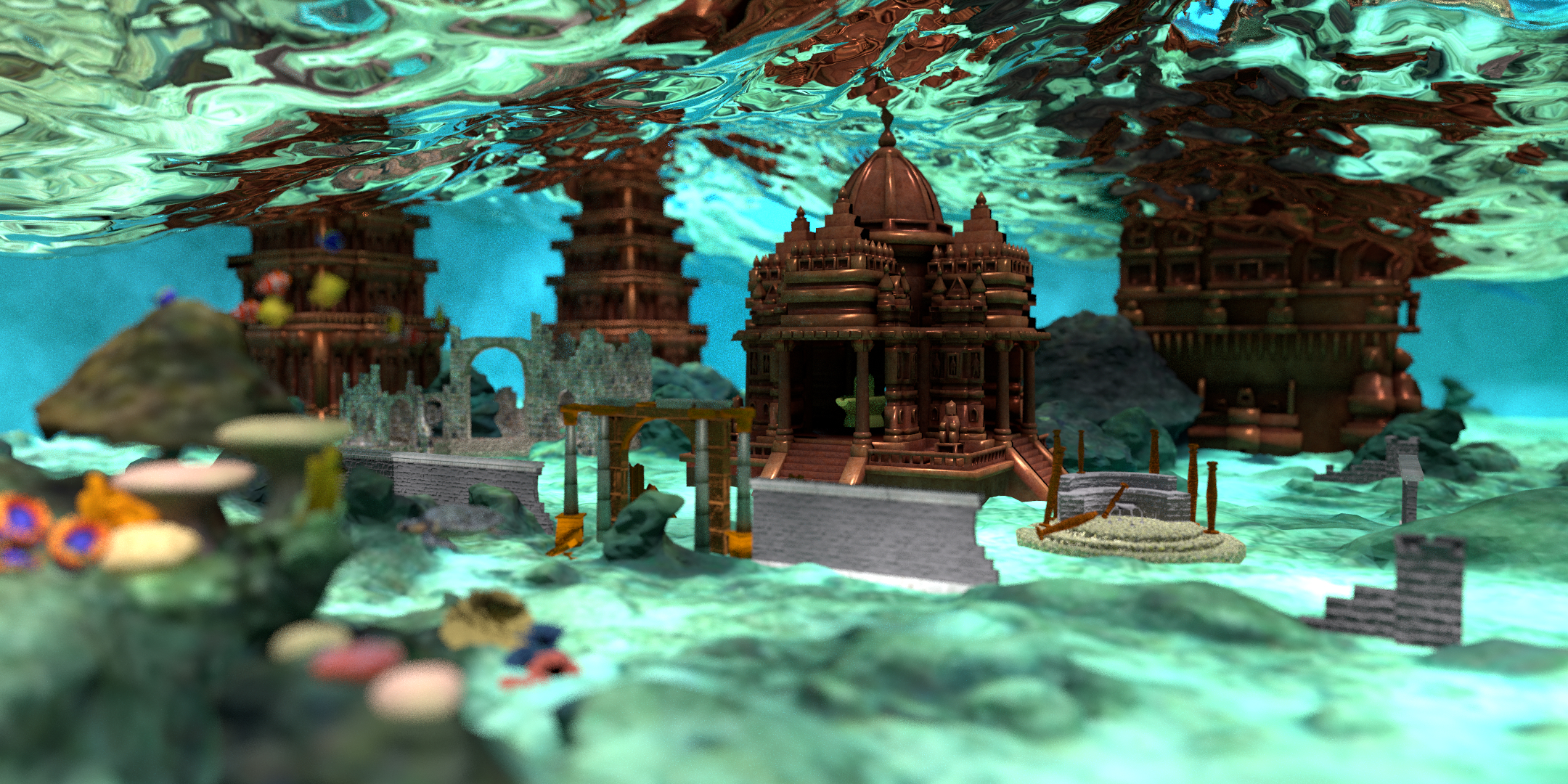

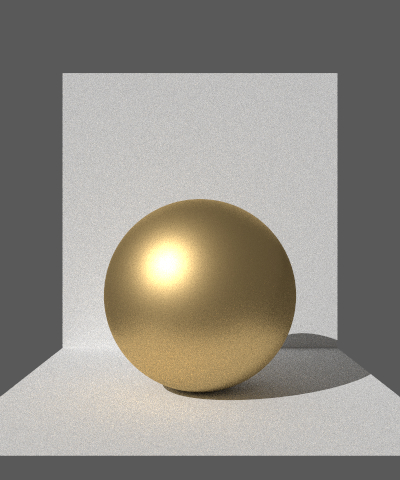

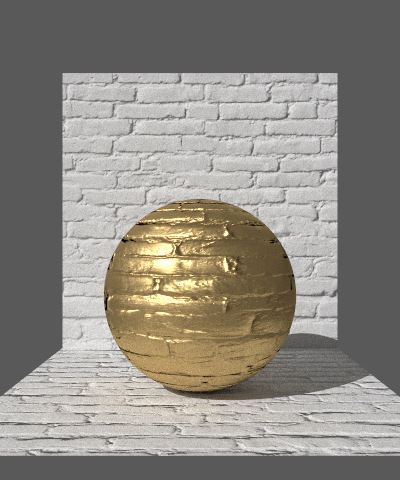

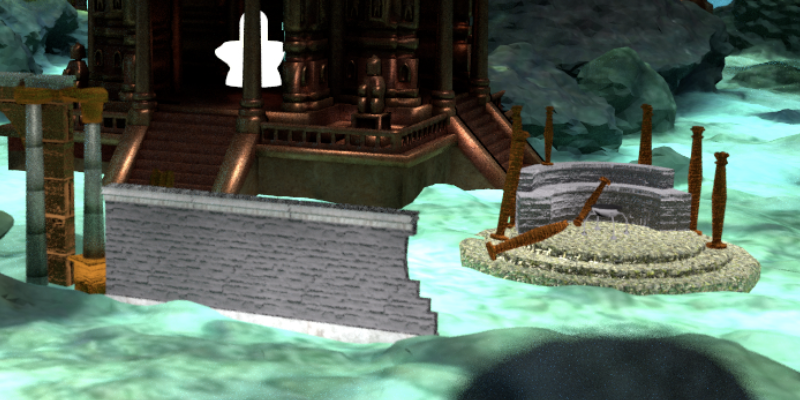

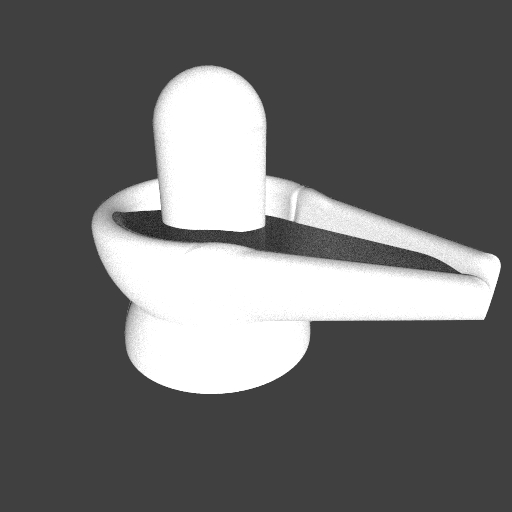

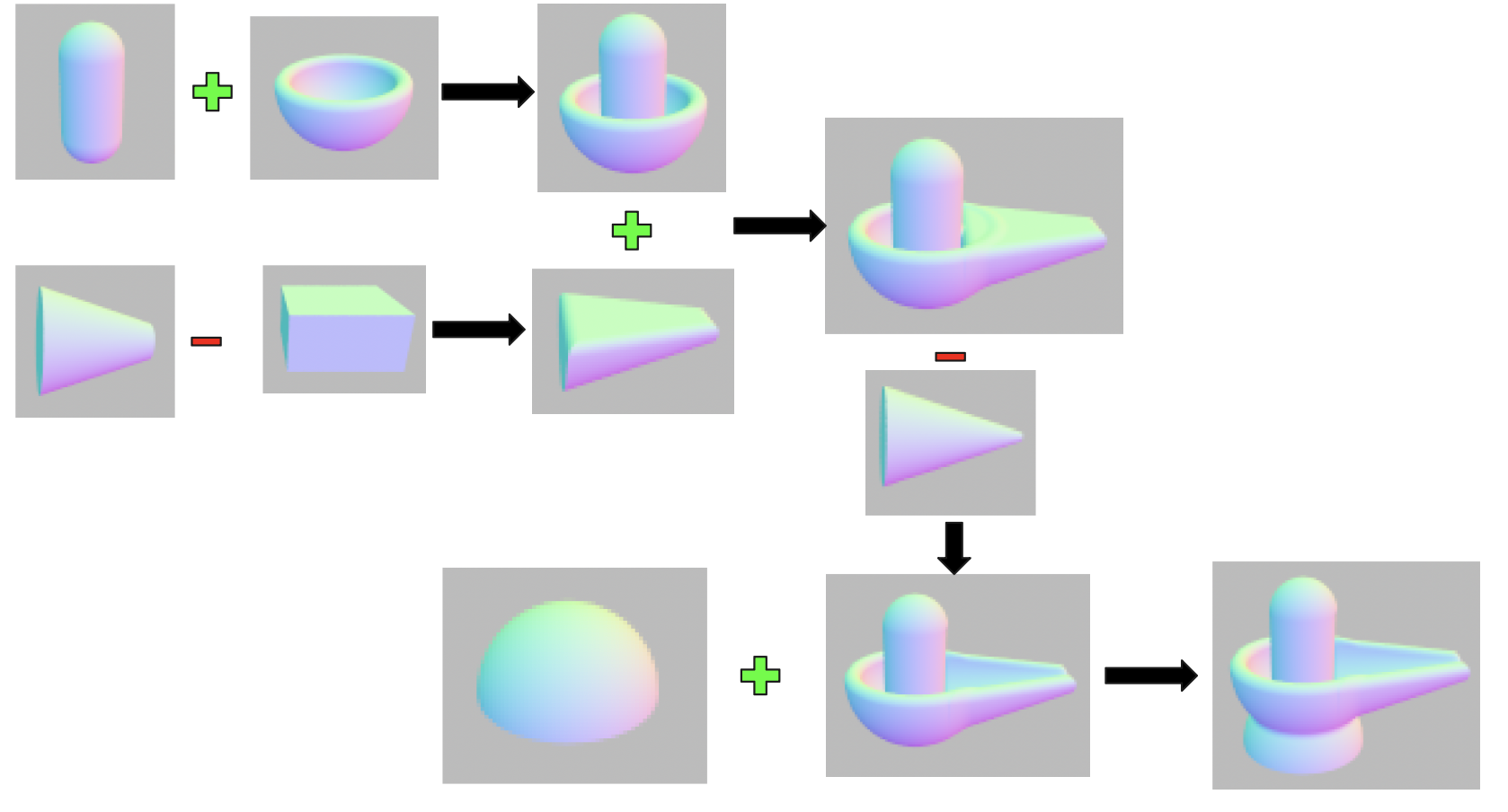

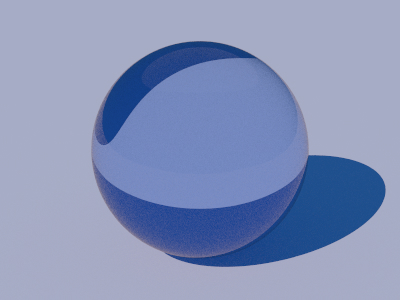

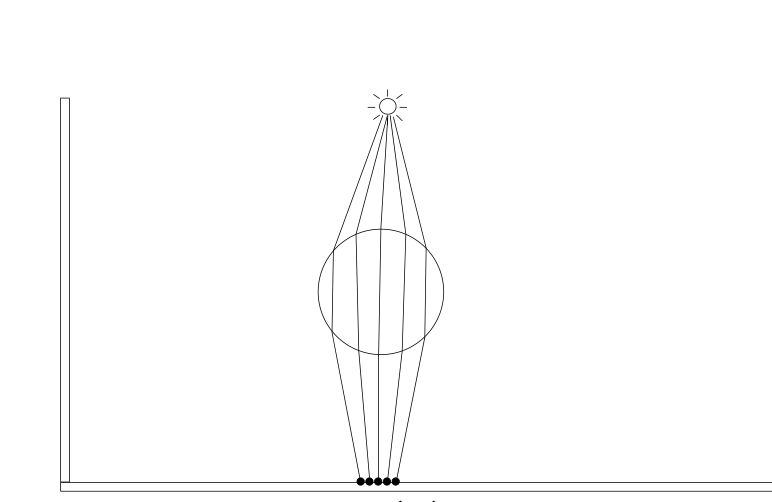

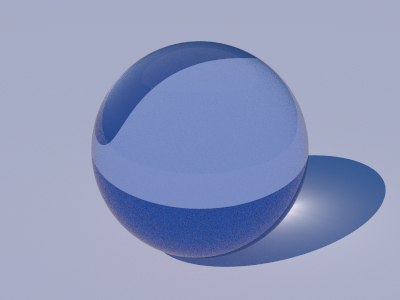

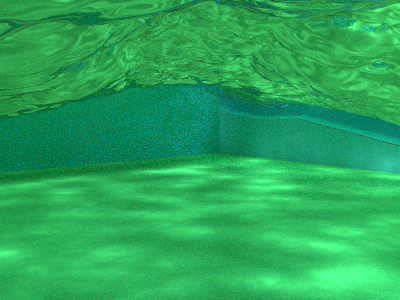

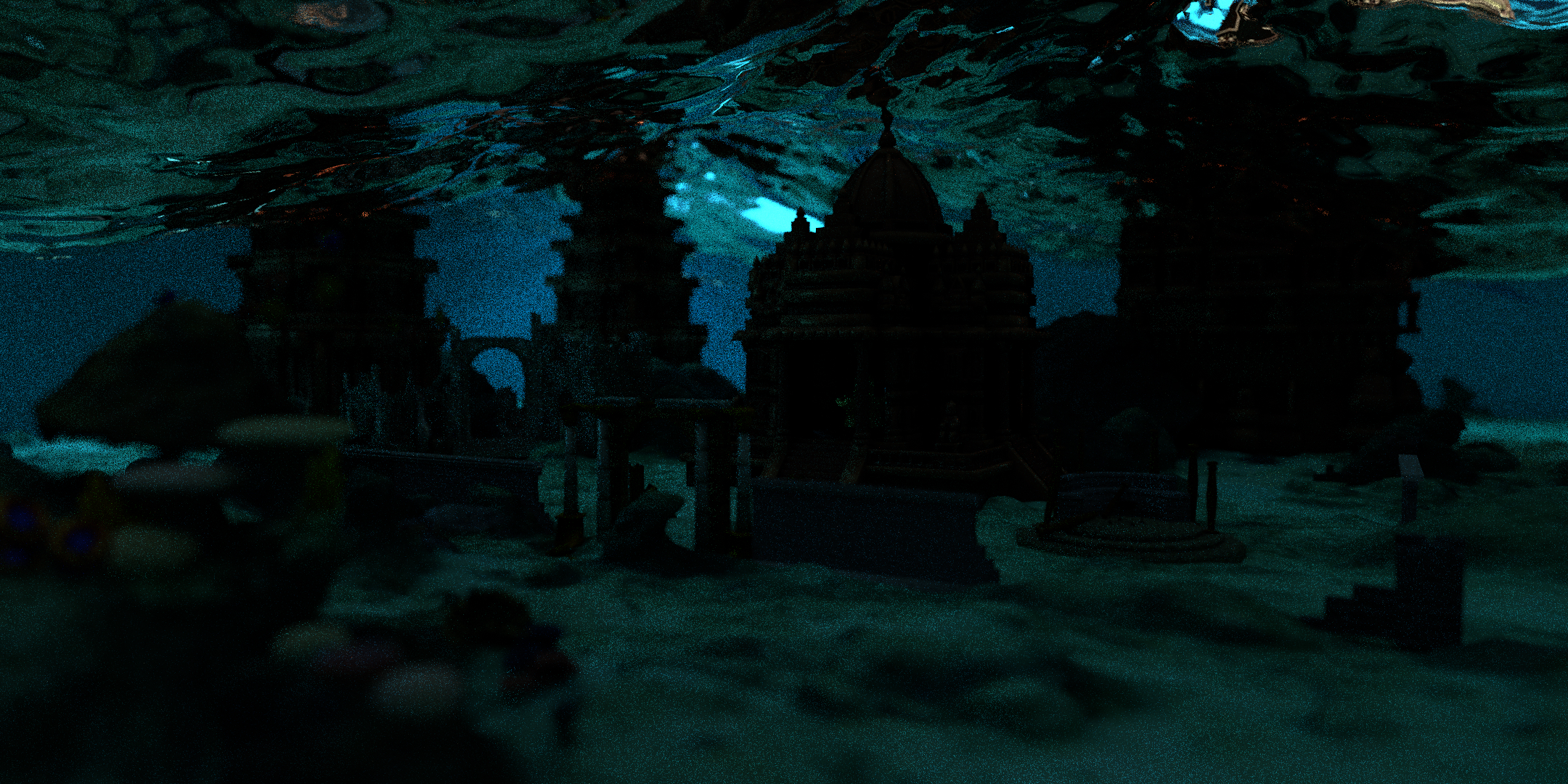

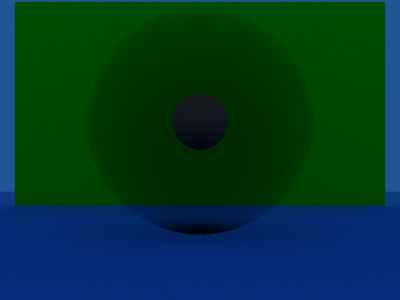

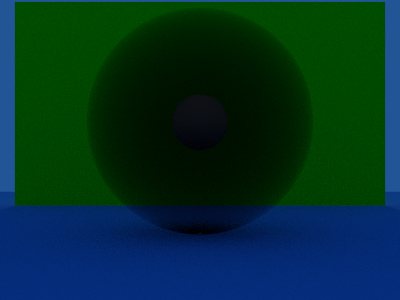

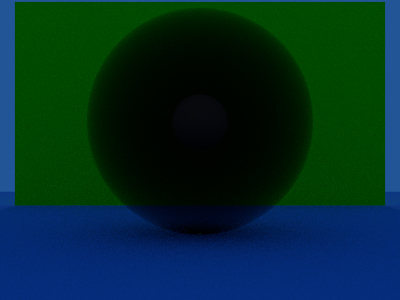

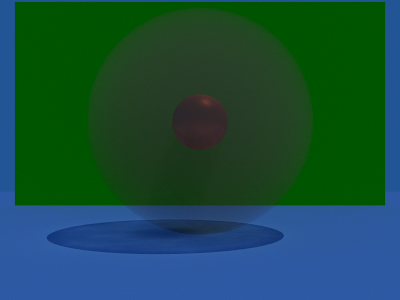

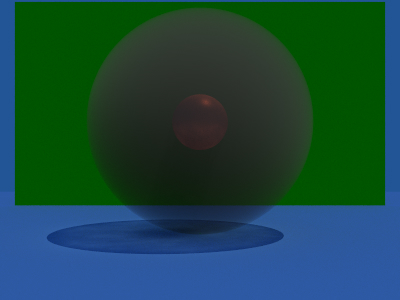

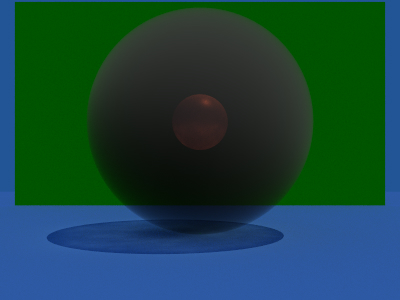

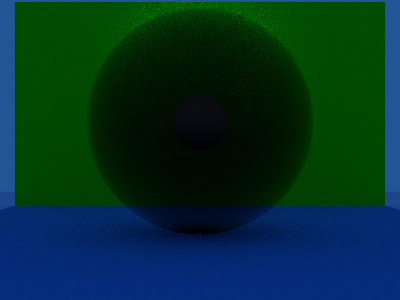

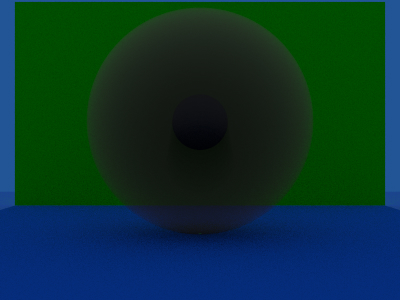

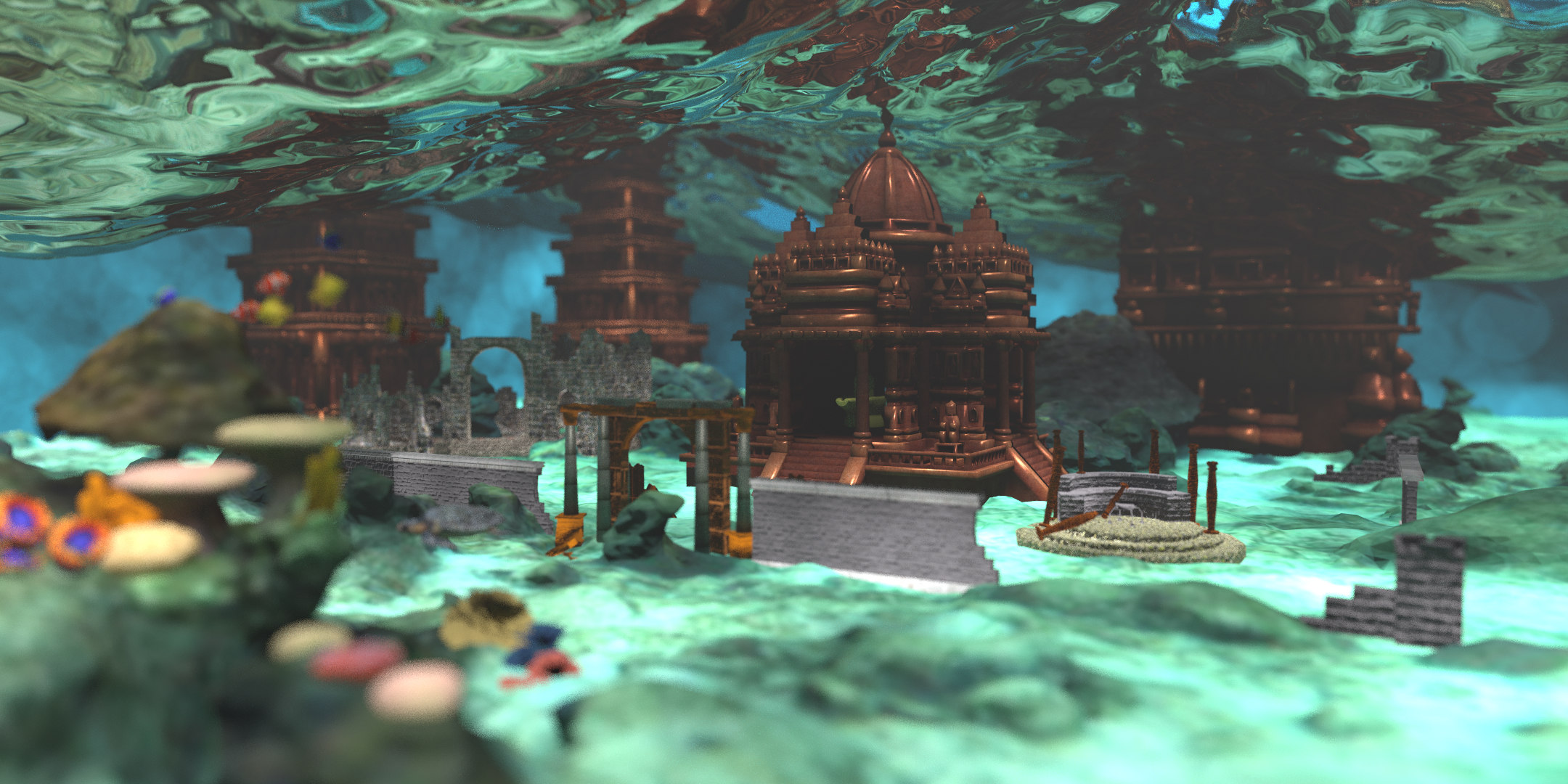

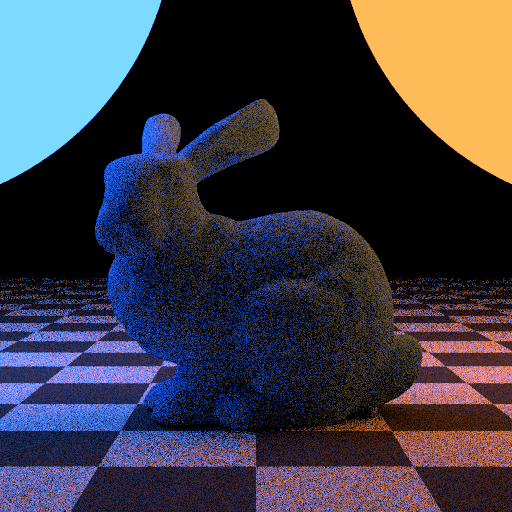

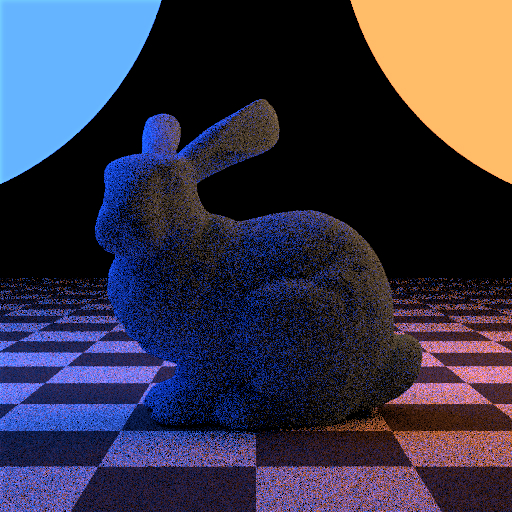

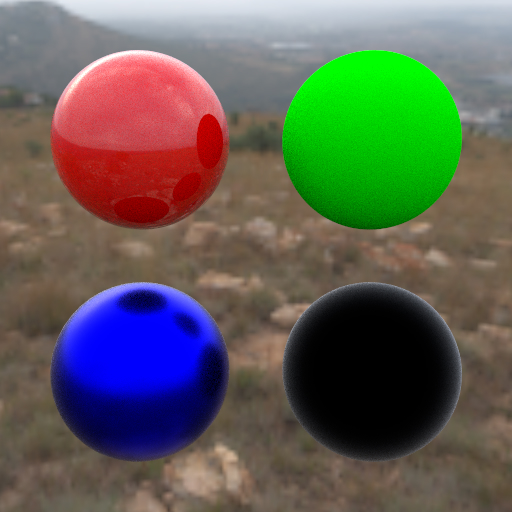

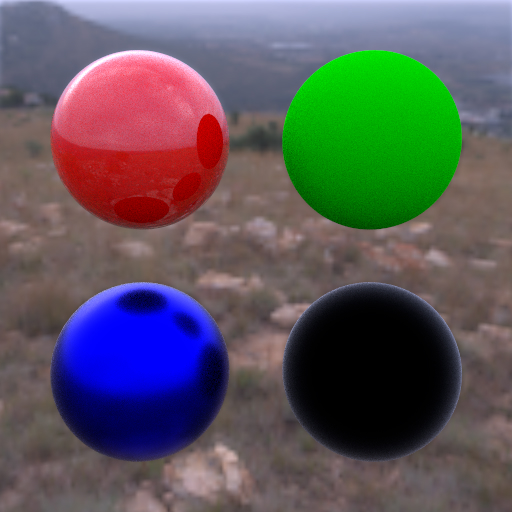

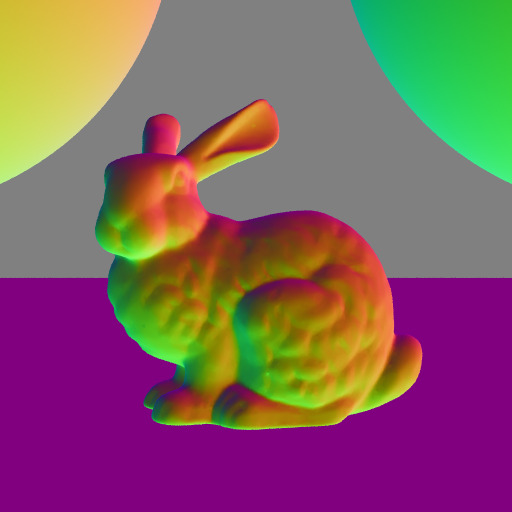

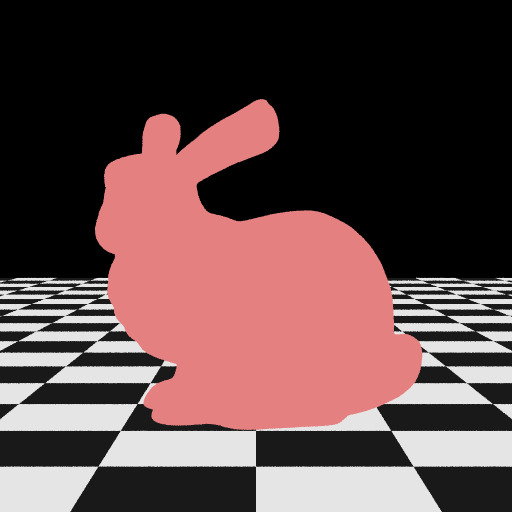

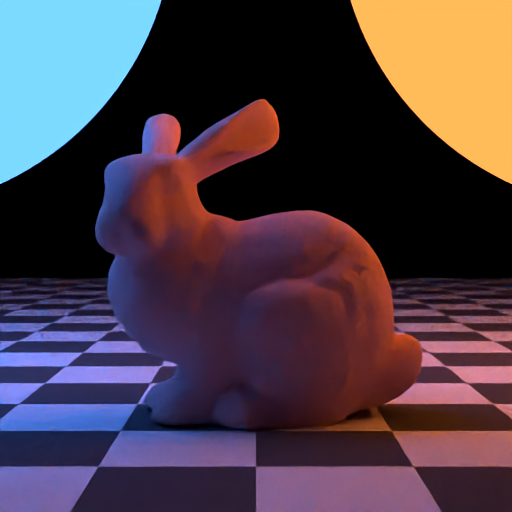

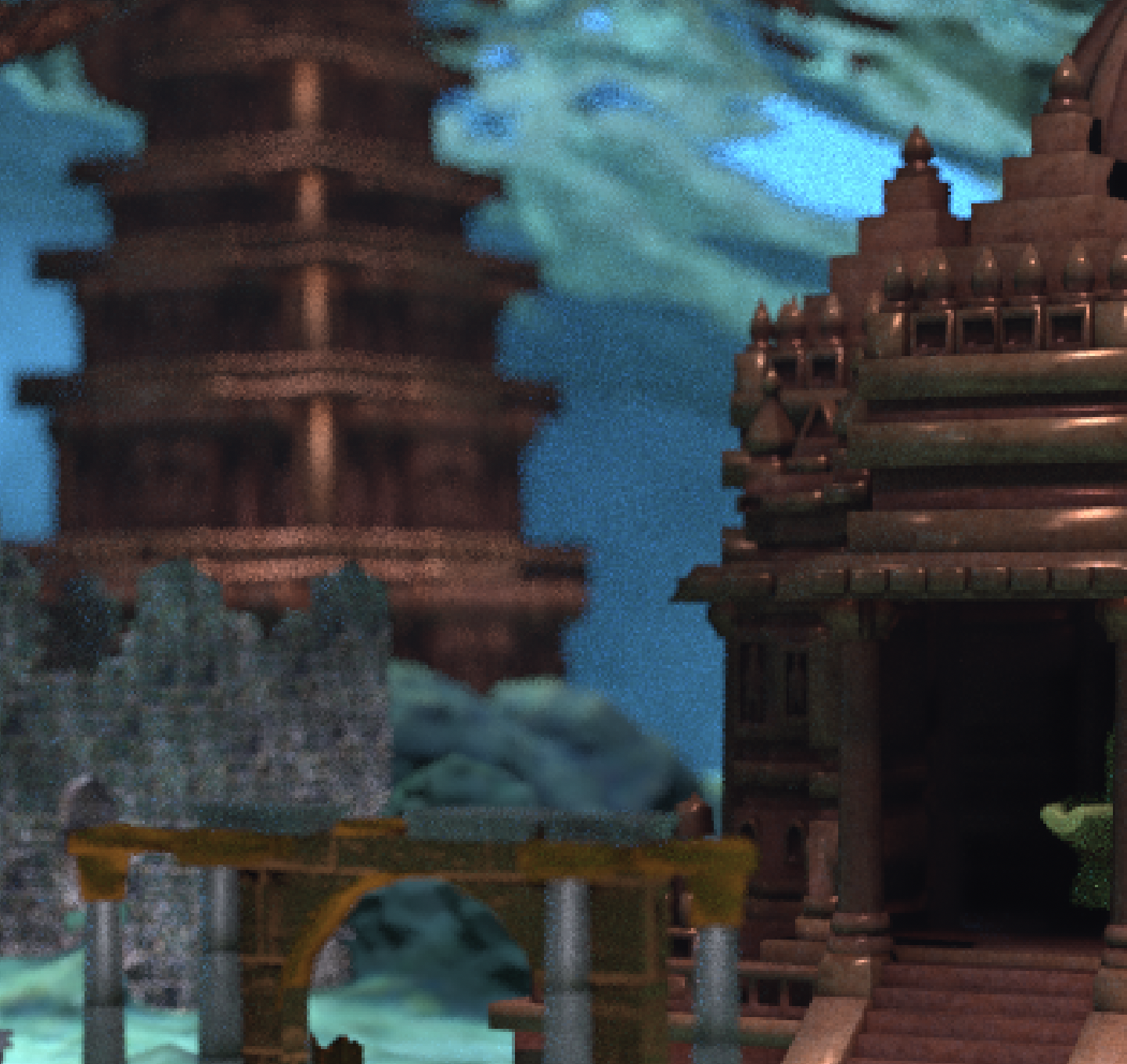

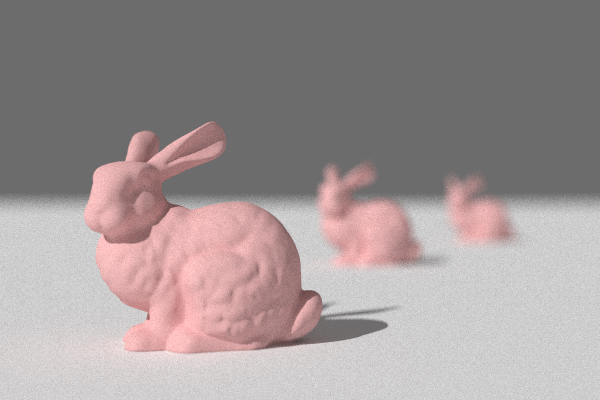

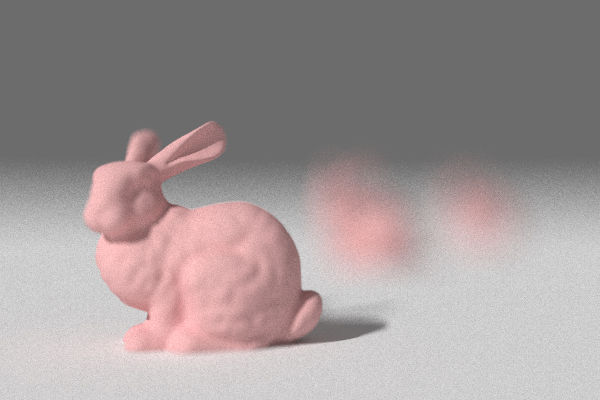

The thin lens camera models depth of field: objects on the focal plane are rendered in focus, while objects outside the depth of field are blurred. It captures the fact that rays do not pass through a single point, as in a pinhole camera, but through an aperture of finite radius. The aperture size controls the amount of blur. In photography it is specified as an f-stop, i.e. relative to the focal length of the lens (f-number = focal length / aperture diameter), so a higher f-stop value means a smaller aperture and less blur. The figures above show the effect of changing the aperture from f/32 to f/8 in the test scene. In our scene we used this feature to focus on the central temple building and blur objects that are very close to the lens, such as the corals, fish, and turtle. The effect of this feature in our scene is shown below.

Code files: src/cameras/thinlens.cpp
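
The thin lens ray generation described above can be sketched roughly as follows. This is a minimal illustration and not the contents of `src/cameras/thinlens.cpp`: the names `Vec3`, `Ray`, `apertureRadius`, `sampleDisk`, and `thinLensRay` are hypothetical, the camera is assumed to sit at the origin looking along +z, and the lens is sampled with a simple polar mapping. The only relation taken as given is the photographic definition f-number = focal length / aperture diameter.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical minimal types for this sketch (not the renderer's own classes).
struct Vec3 { double x, y, z; };
struct Ray  { Vec3 origin; Vec3 dir; };

// f-number N = focalLength / apertureDiameter, so radius = focalLength / (2 N).
// A higher f-stop therefore gives a smaller aperture and less blur.
inline double apertureRadius(double focalLength, double fStop) {
    assert(focalLength > 0.0 && fStop > 0.0);
    return focalLength / (2.0 * fStop);
}

// Map a uniform sample (u1, u2) in [0,1)^2 to a point on a disk of the given
// radius using the polar mapping (sqrt keeps the density uniform over the area).
inline void sampleDisk(double u1, double u2, double radius,
                       double &lx, double &ly) {
    const double kPi = 3.14159265358979323846;
    const double r   = radius * std::sqrt(u1);
    const double phi = 2.0 * kPi * u2;
    lx = r * std::cos(phi);
    ly = r * std::sin(phi);
}

// pinholeDir: camera-space direction of the pinhole ray through the pixel
// (assumed to have z > 0). focusDistance: distance to the plane that is in focus.
inline Ray thinLensRay(Vec3 pinholeDir, double focusDistance,
                       double lensRadius, double u1, double u2) {
    assert(pinholeDir.z > 0.0 && focusDistance > 0.0);

    // Point where the pinhole ray meets the plane z = focusDistance.
    const double t = focusDistance / pinholeDir.z;
    const Vec3 pFocus{pinholeDir.x * t, pinholeDir.y * t, focusDistance};

    // Random origin on the lens aperture (the lens lies in the plane z = 0).
    double lx = 0.0, ly = 0.0;
    sampleDisk(u1, u2, lensRadius, lx, ly);
    const Vec3 origin{lx, ly, 0.0};

    // Every lens sample aims at the same focus point, so geometry on the
    // plane of focus stays sharp and everything else is blurred.
    const Vec3 d{pFocus.x - origin.x, pFocus.y - origin.y, pFocus.z - origin.z};
    const double len = std::sqrt(d.x * d.x + d.y * d.y + d.z * d.z);
    return Ray{origin, Vec3{d.x / len, d.y / len, d.z / len}};
}
```

With a lens radius of zero this reduces to the pinhole camera. Under these assumptions, going from f/32 to f/8 at a fixed focal length makes the aperture radius four times larger, which is consistent with the stronger blur in the f/8 renders.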